This week’s tragic Tesla incident, a single-vehicle collision involving a 2019 Tesla Model S that killed two US men, neither of whom was sitting in the driver’s seat, has caused a stir about the safety of current autonomous technology.

Early reports suggested that the vehicle’s Autopilot function was active and a potential cause, but Tesla CEO Elon Musk has strongly denied those claims. Given our natural fear of anything new or successful, it is far easier for us to question autonomous technology and Tesla than it is to question the actions of the drivers involved, but jumping to that conclusion is simply reckless.

No one deserves to lose their life for any driving mistake, but our response should focus on refining driver behaviour and prioritising safety rather than joining the Tesla pile-on.

Tesla’s Texas Tragedy

Federal investigators are still gathering information on Saturday’s fatal crash in Texas, but an initial police statement suggested that Autopilot, the widely available precursor to “full self-driving”, may have been active and, if so, was being used inappropriately.

The Tesla was travelling at high speed when it failed to negotiate a curve, went off the roadway, crashed into a tree and burst into flames, local television station KHOU-TV said. After the fire was extinguished, authorities found two occupants in the vehicle, one in the front passenger seat and the other in the back seat, the report said.

“[Investigators] are 100-percent certain that no one was in the driver seat driving that vehicle at the time of impact,” Harris County Precinct 4 Constable Mark Herman said. “They are positive.”

Authorities have also served search warrants on Tesla to obtain and secure data from the vehicle, he said.

Can you trick a Tesla into Autopilot?

A concerning test from Consumer Reports has shown just how easy it is to trick a Tesla into Autopilot mode. The team simply attached a small weight to the steering wheel and moved over to the passenger side.

“In our evaluation, the system not only failed to make sure the driver was paying attention, but it also couldn’t tell if there was a driver there at all,” said Jake Fisher, CR’s senior director of auto testing, who conducted the experiment.

“Tesla is falling behind other automakers like GM and Ford that, on models with advanced driver assist systems, use technology to make sure the driver is looking at the road.”

CR engineers easily tricked our @Tesla Model Y this week so that it could drive on Autopilot, the automaker’s driver assistance feature, without anyone in the driver’s seat—a scenario that would present extreme danger if it was repeated on public roads. https://t.co/SWjKvGYFbK

— Consumer Reports (@ConsumerReports) April 22, 2021

There was initial speculation that the men in the Texas crash might have used a similar method, but according to Tesla’s vehicle data that is not the case, which makes the cause of this crash even more puzzling.

Was Autopilot enabled in the Texas crash? Musk thinks not

Tesla CEO Elon Musk claims that Autopilot wasn’t engaged at the time of the crash. He also said that the vehicle involved did not have Full Self-Driving (FSD) capability, a $10,000 add-on that allows vehicles to self-park and automatically change lanes on highways.

“Data logs recovered so far show Autopilot was not enabled & this car did not purchase FSD,” said Musk in a tweeted reply to a Tesla enthusiast confused by the ordeal. “Moreover, standard Autopilot would require lane lines to turn on, which this street did not have.”

Your research as a private individual is better than professionals @WSJ!

Data logs recovered so far show Autopilot was not enabled & this car did not purchase FSD.

Moreover, standard Autopilot would require lane lines to turn on, which this street did not have.

— Elon Musk (@elonmusk) April 19, 2021

Tesla’s own website is also keen to point out that Autopilot and FSD capabilities are meant to help the onboard driver, not replace them.

“Autopilot and Full Self-Driving Capability are intended for use with a fully attentive driver, who has their hands on the wheel and is prepared to take over at any moment,” it said.

“While these features are designed to become more capable over time, the currently enabled features do not make the vehicle autonomous.”

The Tesla pile-on

The issue was perhaps best summarised in a tweet from Twitter user @TeslaHunterX.

When people accidentally crash a Honda, it’s their fault. When someone misuses a tool & hurts themselves or someone else, it’s their fault.

When someone misused a Tesla and kills someone, it’s the Tesla’s and @elonmusk’s fault—not the fault of the one misusing the vehicle $tsla

— The Tesla Hunter (@TeslaHunterX) April 19, 2021

Obviously the user is a Tesla fanboy, but the point still stands: many critics love to put the boot into Tesla. Sadly, people die in road crashes all the time, whether through drink driving, drugs, fatigue, mobile phone use or general driver distraction. It’s a reality of human error and poor behaviour.

Setting aside the media outrage and comparing the actual data instead can help us paint a more accurate picture and, ultimately, make global changes that significantly improve driver safety.

Accident Data – what do the numbers say?

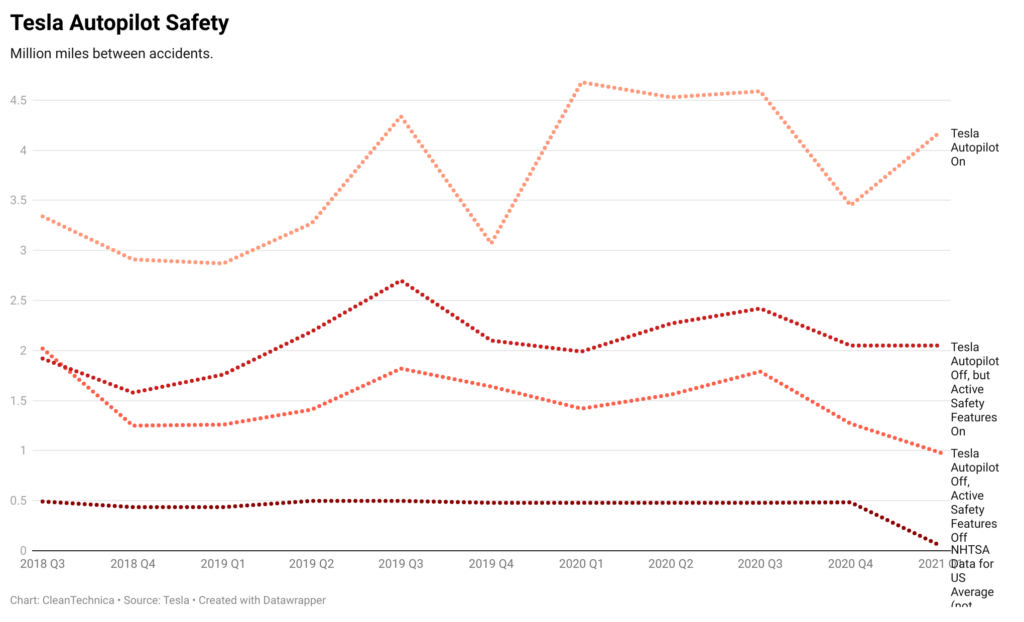

Tesla recently released its accident data for Q1 2021. Below is a quick recap:

- In the first quarter, Tesla registered one accident for every 4.19 million miles driven in which drivers had Autopilot engaged.

- For those driving without Autopilot but with Tesla’s active safety features, it registered one accident for every 2.05 million miles driven.

- For those driving without Autopilot and without Tesla’s active safety features, it registered one accident for every 978,000 miles driven.

By comparison, Tesla noted, the National Highway Traffic Safety Administration’s (NHTSA) most recent data shows that in the United States there is an automobile crash every 484,000 miles. On American roads in 2020, an estimated 42,060 people died in motor vehicle crashes and 4.8 million were injured.
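To make the comparison above concrete, the "one crash every N miles" figures can be turned into crash rates. This is a minimal, illustrative sketch: the dictionary labels and the helper names `crashes_per_million_miles` and `times_baseline` are our own, and the numbers are simply the ones quoted above. The ratios restate the published figures only; they do not adjust for the kinds of roads each group of miles covers or for differences in how Tesla and NHTSA define a crash.

```python
# Miles driven per recorded crash, as quoted in the article. The first three are
# Tesla's Q1 2021 figures; the last is the NHTSA national figure Tesla cited.
# Note: these are not adjusted for road type or crash definitions.
MILES_PER_CRASH = {
    "Autopilot engaged": 4_190_000,
    "No Autopilot, active safety features": 2_050_000,
    "No Autopilot, no active safety features": 978_000,
    "US average (NHTSA)": 484_000,
}

BASELINE = "US average (NHTSA)"


def crashes_per_million_miles(miles_per_crash: float) -> float:
    """Convert 'one crash every N miles' into crashes per million miles."""
    return 1_000_000 / miles_per_crash


def times_baseline(miles_per_crash: float, baseline_miles: float) -> float:
    """How many times more miles are driven per crash than in the baseline."""
    return miles_per_crash / baseline_miles


if __name__ == "__main__":
    baseline_miles = MILES_PER_CRASH[BASELINE]
    for label, miles in MILES_PER_CRASH.items():
        rate = crashes_per_million_miles(miles)
        ratio = times_baseline(miles, baseline_miles)
        print(f"{label}: {rate:.2f} crashes per million miles "
              f"({ratio:.1f}x the baseline miles per crash)")
```

Run as a script, it reports roughly 0.24 crashes per million miles with Autopilot engaged versus about 2.07 for the US average, which is simply another way of reading the same published numbers.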

That represents an 8 per cent increase in deaths over 2019, the largest year-over-year increase in almost a century. Because fewer miles were driven in 2020, the fatality rate per mile driven rose even faster, by 24 per cent, which is also the highest spike in almost a century.
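Since the fatality rate is deaths divided by miles driven, the two percentages quoted above together imply how much driving fell in 2020. The sketch below works that out; the function name `implied_change_in_miles` is our own, and because the 8 and 24 per cent inputs are rounded, the result is only approximate.

```python
def implied_change_in_miles(deaths_change_pct: float, rate_change_pct: float) -> float:
    """Return the implied percentage change in miles driven.

    Fatality rate = deaths / miles, so
    miles_new / miles_old = (deaths_new / deaths_old) / (rate_new / rate_old).
    """
    deaths_ratio = 1 + deaths_change_pct / 100
    rate_ratio = 1 + rate_change_pct / 100
    return (deaths_ratio / rate_ratio - 1) * 100


if __name__ == "__main__":
    # Rounded figures quoted in the article: deaths +8%, fatality rate +24%.
    change = implied_change_in_miles(8, 24)
    print(f"Implied change in miles driven: {change:.1f}%")
```

With the rounded inputs, this works out to a drop of roughly 13 per cent in miles driven, which is why the per-mile rate rose so much faster than the raw death toll.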

In a nutshell

It’s hard to argue that Tesla vehicles with Autopilot engaged are less safe than vehicles without Autopilot. Blaming Tesla’s Autopilot and FSD for this week’s tragic incident only serves to create division when the automotive industry requires a unified approach to prioritising road safety.

The Australasian Fleet Management Association is committed to encouraging our members to purchase the newest and safest vehicles for their fleets.

Look for vehicles that have the latest safety features and autonomous options available, and check out our safety explanatory videos for more information on how each feature works.

For the moment, nothing can fully replace a human driver, but being in a newer vehicle could just save your life.